Networking, as we all know, is the backbone of any modern technology. It allows devices and applications to communicate, whether they are sitting next to each other or on opposite sides of the world. It's all about connecting things. In the context of Kubernetes, networking is even more important: Kubernetes is a container orchestration platform, which means it's responsible for managing and scaling a large number of containers running in a cluster.

These containers need to be able to communicate with each other, as well as with other parts of the infrastructure, such as external services and databases. Kubernetes networking allows this communication to happen seamlessly and securely. What makes Kubernetes networking a challenge is that it’s a highly distributed network: there are a ton of “micro applications” running inside a cluster, and each of them needs its own network configuration.

The Need for Networking

Before getting into the way networks are handled in a cloud-native system such as Kubernetes, let us first try to understand why we need robust networking in the first place.

Let’s say that we have a particular device. This device could be absolutely anything: your phone, your laptop, your smart TV, your smart bulbs, or anything else. Every single one of these devices has its own little internal network, and we usually don't have direct access to it, because it's a highly customized and tuned network. That internal network acts as a bus over which multiple components communicate with each other to produce some sort of output.

Now, what do I mean by these highly customized networks? Imagine an entire smart home setup. What connects all your smart bulbs and smart TVs? The answer is a network, either wireless or wired. These networks have been configured in a very specific way so that all these different devices can connect and work together seamlessly.

Let’s take another example. What happens when you watch a YouTube video on your phone? Behind the scenes, there may be some inter-process communication going on inside the phone's own little network before all of this data gets turned into a proper video format. That is the output we see when the video plays.

So, once we go beyond our phone or home network, we have different devices that need to be able to communicate with each other. Over nearly four decades of innovation, we've seen networks evolve from public to private, and then again from private to public. But what are the special kinds of devices that enable us to communicate with people globally? That’s where intermediate devices come in: they enable this kind of wide-area communication.

The Role of Intermediate Devices

Your device right now is connected to some form of network. It might be a wireless network such as your cellular network, which provides 5G connections, or it might be your home Wi-Fi network. What ends up happening is that your device talks to some sort of core device that knows how to get your traffic to other networks, locations, and destinations, because your device itself doesn't know about the rest of the network.

There could be an entire big world beyond your device, but your device doesn't know about it at this global scale. So there are devices in between your device (whether it’s your phone, your laptop, your TV, or anything else) and the service you are trying to access. Those intermediary devices move your requests through the network to make sure they reach their destination as quickly as possible, so you get your result on time.

These intermediary devices are physical devices that live in data centres, in cloud environments, at points of presence (POPs), or at service provider locations, and they control a big part of the internet. When we think about networks and the connectivity between them, it's very challenging to navigate or build on top of those existing networks because there are so many constraints to consider, such as what the physical world is doing and the way it physically moves our packets around.

Going back to those intermediary devices, let's call them routers and switches, because they're the ones responsible for moving packets around very quickly. Deciding where to send each packet next is the job of a router, which forwards traffic between networks based on IP addresses. A router is usually paired with a switch, which forwards traffic between devices on the same local network, so we can connect even more devices to a network.
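To make the router's decision concrete, here is a minimal Python sketch of how a forwarding choice can be made. IP routers pick the route with the longest prefix that contains the destination address (longest-prefix match). The routing table, its prefixes, and the next-hop labels below are made up for illustration, and real routers do this lookup in optimized hardware or kernel code rather than in a loop.

```python
import ipaddress
from typing import Optional

# Hypothetical routing table: (destination prefix, next hop).
ROUTES = [
    ("0.0.0.0/0", "isp-uplink"),      # default route: matches every IPv4 address
    ("10.0.0.0/8", "corp-core"),
    ("10.1.0.0/16", "datacentre-a"),
    ("10.1.2.0/24", "rack-12"),
]

def next_hop(destination: str, routes=ROUTES) -> Optional[str]:
    """Pick the next hop for an IP address using longest-prefix match."""
    addr = ipaddress.ip_address(destination)
    best_hop, best_len = None, -1
    for prefix, hop in routes:
        net = ipaddress.ip_network(prefix)
        # A route applies if the address falls inside its prefix; among all
        # applicable routes, the most specific (longest prefix) wins.
        if addr in net and net.prefixlen > best_len:
            best_hop, best_len = hop, net.prefixlen
    return best_hop

if __name__ == "__main__":
    for ip in ("10.1.2.7", "10.1.9.9", "8.8.8.8"):
        print(ip, "->", next_hop(ip))
```

For example, `next_hop("10.1.2.7")` returns `"rack-12"`, while `next_hop("8.8.8.8")` only matches the default route and returns `"isp-uplink"`.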

Cloud Native Networks

Now, let’s talk a bit about how networks are handled in cloud-native systems. When we get into the cloud native landscape, there are several different areas that we want to focus on, and all of them still depend on the physical network underneath.

We talked about the importance of various devices such as routers and switches in a physical network, and how they connect the entire world. But now, let’s take a look at the cloud native and Kubernetes ecosystem.

Let’s say you want to provision a Kubernetes cluster on a cloud provider such as AWS, GCP or Civo. What happens when you press the “create cluster” button? What you can see is the state of the cluster and its nodes. You can’t see what’s going on in the background. However, if we dig around the cloud dashboard a bit, we can see exactly what’s going on.

The cloud provider is creating a couple of VMs, which will act as nodes for your cluster. It might also create some storage volumes depending on how you configured your cluster, and there might even be a brand new load balancer. That’s all well and good, but the question we have to ask is: how are all these different resources getting connected into this single Kubernetes cluster?

We already saw that we need to connect tons of physical devices to create a network. So how is Kubernetes able to do it in such an automated way? If it needs physical networks to connect everything, where are the physical devices that connect the cluster? Well, the answer is that no dedicated physical devices get wired up for your cluster. The cloud provider's physical network is still there underneath, but the cluster's resources are connected to each other in software.

Virtual Networks

This is where the entire concept of virtual networks comes into play. Before diving into virtual networks, let’s come back to the concept of virtualization. Virtual machines and containers are made possible by a neat technology called virtualization, which lets us split one physical computer into multiple virtual computers. You can think of it as a way to create multiple computers from a single computer’s hardware.

So, let's take a short look at how virtualization is achieved. We have a host computer, i.e., the physical computer. On top of it we install a piece of software called a hypervisor, which we use to create many virtual machines, i.e., to split the physical computer into multiple sub-computers. Containers take a lighter-weight approach: instead of a hypervisor, they rely on features of the host operating system's kernel to isolate processes from each other.

Virtual networks follow the same concept. Instead of using many physical routers or switches, you can take one powerful physical network and split it into many smaller virtual routers and switches implemented in software. Using these virtual networks, we can create hundreds or thousands of separate networks to work with.

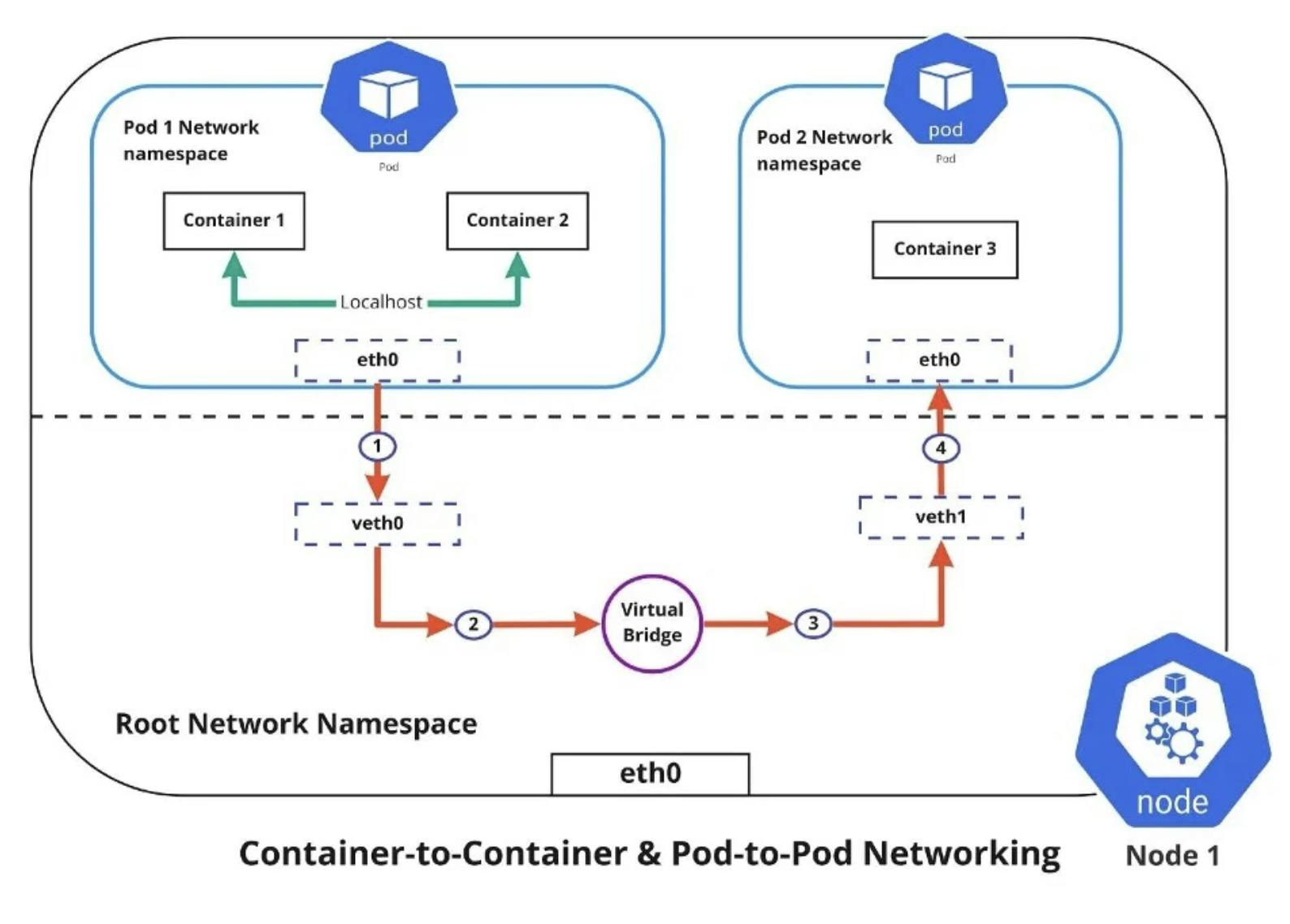

Now, before we look at the networks in a Kubernetes cluster, we need to look at one more thing. The Linux kernel has an amazing feature called namespaces, which essentially allows us to isolate processes; this is what containers use. To separate networks, there is a network namespace as well. It isolates the network stack, so on a single Linux host you can have many separate networks, each with its own network interfaces, routing tables, and firewall rules. From a networking standpoint, every single container is just a process running inside a network namespace.
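On Linux you can see which network namespace a process lives in: `/proc/<pid>/ns/net` is a symbolic link whose target looks like `net:[4026531992]`, and two processes showing the same number share one network stack. The Python sketch below reads and parses that link. It only works on Linux, and the inode number used in the example is illustrative.

```python
import os
import re

def parse_ns_link(link: str) -> int:
    """Extract the inode number from a namespace link such as 'net:[4026531992]'."""
    match = re.fullmatch(r"net:\[(\d+)\]", link)
    if match is None:
        raise ValueError(f"not a network namespace link: {link!r}")
    return int(match.group(1))

def net_namespace_of(pid="self") -> int:
    """Return the inode of the network namespace that process `pid` belongs to (Linux only)."""
    return parse_ns_link(os.readlink(f"/proc/{pid}/ns/net"))

if __name__ == "__main__" and os.path.exists("/proc/self/ns/net"):
    # Processes (or containers) printing the same number share one network stack.
    print("network namespace inode:", net_namespace_of())
```

Since all containers in a Kubernetes pod join the same network namespace, running this in two containers of one pod should print the same number, while containers in different pods should print different ones.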

Now, we know that Kubernetes is a container orchestrator, which means that at the end of the day, its job is to run containers. We also know that Kubernetes runs containers inside tiny pods. In a Kubernetes environment, each pod gets its own network namespace, which all the containers in that pod share; that is why containers in the same pod can reach each other on localhost.

One thing that we need to highlight is that the entire paradigm of virtual networks is completely software-defined. That means we can easily automate their creation, which would not be practical if we were using physical interfaces to connect everything. That is why virtual networks are such a big and important aspect of Kubernetes and of connecting the world.

Alright, so we have now created the virtual network namespaces, and the pods inside the cluster have a network that can connect to other networks. But how do we make sure that all these network namespaces connect to the correct networks? We already know that pods are ephemeral, which means they can be destroyed and recreated at any time. When a pod is recreated, it usually gets a different IP address.

Since the IP address changes, we certainly cannot use it to decide which other networks a pod should connect to. So, we’re going to need a DNS service within our Kubernetes cluster.

DNS

Before diving into DNS, let’s use an analogy. We all have phones. How do we make a phone call to another person? We have to dial their phone number. Now imagine you had 100 friends you talk with. You would need to memorize 100 different phone numbers, and even if you managed that, there's a chance you would dial a wrong number. So instead we use something like a phonebook, where we save each phone number along with the contact's name.

DNS works in the same way as a phonebook: it maps names to IP addresses. Let’s say you want to head over to google.com. You enter the URL in your browser, hit ENTER, and the page loads. That’s great and all, but what just happened?

Behind the scenes, your browser saw that you wanted to go to google.com, but it did not know the IP address behind that hostname. So it asks a DNS server to look up the IP address for that name. Once your browser knows which IP address google.com lives at, it connects to that address and loads the page.
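The lookup your browser performs goes through the operating system's resolver, which you can call directly from Python with `socket.getaddrinfo`. Depending on the system's configuration, the resolver may answer from local files such as `/etc/hosts` before asking a DNS server, and the addresses returned for public names like google.com vary by location and over time, so this sketch simply prints whatever comes back.

```python
import socket

def resolve(hostname: str, port: int = 443) -> list:
    """Ask the operating system's resolver which IP addresses sit behind a hostname."""
    addresses = []
    # getaddrinfo returns (family, type, proto, canonname, sockaddr) tuples;
    # the IP address is the first element of sockaddr for IPv4 and IPv6 alike.
    for *_, sockaddr in socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP):
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses

if __name__ == "__main__":
    # "localhost" is usually answered from /etc/hosts; try "google.com" with network access.
    print(resolve("localhost"))
```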

Since everything in a Kubernetes environment is ephemeral, relying on hardcoded IP addresses will not work. Hence we need a DNS service that automatically keeps its records up to date as Services and pods come and go. In a Kubernetes cluster, CoreDNS is the most common DNS service. However, DNS is only part of the story when it comes to enabling pods to communicate. The other half of the story is Kubernetes Services. First, let’s take a look at what the Container Network Interface (CNI) is.
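Inside a pod, short names like `my-svc` work because of how the pod's resolver is configured. With the default `ClusterFirst` DNS policy, a pod's `/etc/resolv.conf` typically lists the search domains `<namespace>.svc.cluster.local`, `svc.cluster.local`, and `cluster.local`, plus `options ndots:5`, and Services get names of the form `<service>.<namespace>.svc.cluster.local`. The Python sketch below models the order in which a resolver tries candidate names under those settings. It is a simplification of glibc-style resolver behaviour, `cluster.local` is only the usual default cluster domain, and the Service and namespace names are hypothetical.

```python
def search_candidates(name: str, namespace: str,
                      cluster_domain: str = "cluster.local", ndots: int = 5) -> list:
    """Return the fully qualified names a pod's resolver would try, in order.

    Models a typical Kubernetes pod resolv.conf:
      search <namespace>.svc.<domain> svc.<domain> <domain>
      options ndots:5
    """
    if name.endswith("."):
        return [name]  # already fully qualified: the search list is skipped
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    # Names with at least `ndots` dots are tried as-is first; shorter names
    # go through the search list first and are tried as-is only at the end.
    if name.count(".") >= ndots:
        return [absolute] + expanded
    return expanded + [absolute]

if __name__ == "__main__":
    for candidate in search_candidates("my-svc", "shop"):
        print(candidate)
```

From namespace `shop`, `my-svc` resolves on the first try as `my-svc.shop.svc.cluster.local.`, while `my-svc.other` fails the first candidate and succeeds on `my-svc.other.svc.cluster.local.`, which is how cross-namespace lookups work. A name like `google.com` has fewer than five dots, so it goes through all three search domains before being tried as-is, a well-known source of extra DNS queries with `ndots:5`.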

Container Network Interface (CNI)

In the previous section, we mentioned that the DNS services make sure that the recreated network is reachable. But when this network is created or re-created, what assigns it the IP address in the first place?

To standardize how networking is handled for containers, a specification called the Container Network Interface (CNI) was introduced. It is a set of rules defining how a container runtime asks a network plugin to set up (and later tear down) networking for a container, including assigning it an IP address. Any networking solution for a Kubernetes cluster that wants to plug into this model follows it.
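In CNI, handing out addresses is delegated to IPAM (IP address management) plugins; `host-local`, one of the reference plugins, allocates addresses from a configured subnet. The Python sketch below is a hypothetical, heavily simplified allocator in that spirit, not host-local's actual code. It assumes a node's pod CIDR of `10.244.1.0/24`, reserves the first usable address as a gateway, and hands the rest out one per container. It also shows why a recreated pod usually comes back with a different IP.

```python
import ipaddress

class SimpleIPAM:
    """Toy allocator handing out pod IPs from one node's pod CIDR (illustrative only)."""

    def __init__(self, cidr: str):
        self.network = ipaddress.ip_network(cidr)
        hosts = list(self.network.hosts())
        self.gateway = hosts[0]   # first usable address reserved for the bridge/gateway
        self._free = hosts[1:]    # remaining addresses available to pods
        self._assigned = {}       # container ID -> IP address

    def allocate(self, container_id: str):
        if container_id in self._assigned:
            return self._assigned[container_id]  # same container, same IP
        if not self._free:
            raise RuntimeError(f"pod CIDR {self.network} is exhausted")
        ip = self._free.pop(0)
        self._assigned[container_id] = ip
        return ip

    def release(self, container_id: str) -> None:
        ip = self._assigned.pop(container_id, None)
        if ip is not None:
            self._free.append(ip)  # freed addresses go to the back of the pool

if __name__ == "__main__":
    ipam = SimpleIPAM("10.244.1.0/24")
    print(ipam.allocate("pod-a"), ipam.allocate("pod-b"))
    ipam.release("pod-a")
    print(ipam.allocate("pod-a-recreated"))
```

Here `pod-a` gets `10.244.1.2` and `pod-b` gets `10.244.1.3`; after `pod-a` is released, its replacement gets `10.244.1.4`, because freed addresses are reused only after the rest of the pool.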

Various CNI plugins have been created and refined over the years. Popular examples include Calico, Cilium and Flannel. They all do essentially the same job, but each of these CNI plugins has its own way of doing things and may provide different features.

Kubernetes Services

Now, for Kubernetes pods to be able to communicate with each other reliably, we need to expose those individual pods through Kubernetes objects called Services. In the simplest terms, a Service is a way to let pods communicate either with the outside world or with other pods. There are three main types of Services. Let’s take a brief look at each.

ClusterIP: A ClusterIP Service gives a set of pods a stable virtual IP address that is reachable only from inside the cluster. It’s an internal network for the pods, and it is the default Service type.

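NodePort: A NodePort Service opens the same port (by default in the range 30000-32767) on every node and forwards traffic arriving there to the Service's pods. This can be limiting, because clients have to target a specific node's IP address, and nodes can come and go pretty easily. If the node your clients point at disappears, they've lost access to your app.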

LoadBalancer: A LoadBalancer Service asks the cloud provider to provision an external load balancer, which distributes incoming traffic across the available Kubernetes nodes and on to the Service's pods. Replicas of a pod can run on different nodes, and the load balancer helps spread traffic among those copies.
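The three Service types differ mainly in one field of the Service object. The sketch below builds a Service manifest as a Python dict using real Service API fields (`spec.type`, `spec.selector`, `ports[].port`, `targetPort`, `nodePort`); the Service name, labels, and port numbers are hypothetical. It also encodes two API rules: `nodePort` only applies to NodePort and LoadBalancer Services, and it must fall within the node-port range, which defaults to 30000-32767 but is configurable per cluster.

```python
import json

NODE_PORT_RANGE = range(30000, 32768)  # Kubernetes default NodePort range: 30000-32767

def service_manifest(name, selector, port, target_port,
                     svc_type="ClusterIP", node_port=None):
    """Build a Kubernetes Service manifest as a plain, JSON-serialisable dict."""
    if svc_type not in ("ClusterIP", "NodePort", "LoadBalancer"):
        raise ValueError(f"unsupported Service type: {svc_type}")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        if svc_type == "ClusterIP":
            raise ValueError("nodePort is only valid for NodePort and LoadBalancer Services")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} is outside the default range")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": svc_type, "selector": selector, "ports": [port_spec]},
    }

if __name__ == "__main__":
    svc = service_manifest("web", {"app": "web"}, port=80, target_port=8080,
                           svc_type="NodePort", node_port=30080)
    # kubectl accepts JSON as well as YAML, e.g.: python this_script.py | kubectl apply -f -
    print(json.dumps(svc, indent=2))
```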

A Service in Kubernetes is what actually ties names to pods. It isn't DNS itself, but each Service gets a stable DNS name, and traffic sent to that name is forwarded to the IPs of the pods currently behind the Service. Services use labels and selectors to decide which of the pods you have deployed they should map to.
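The label-and-selector matching can be sketched in a few lines of Python. A Service's `spec.selector` is an equality-based map: a pod is selected when it carries every key/value pair in the selector. The pods and IPs below are hypothetical, and in a real cluster this bookkeeping is done by Kubernetes controllers (which also take pod readiness into account), not by your code.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Equality-based match: every key/value pair in the selector must be on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())

def backend_ips(selector: dict, pods: list) -> list:
    """IPs of the pods a Service with this selector would send traffic to."""
    if not selector:
        # Kubernetes does not manage endpoints automatically for a Service without a selector.
        return []
    return [pod["ip"] for pod in pods if selector_matches(selector, pod["labels"])]

# Hypothetical pods running in the cluster.
PODS = [
    {"name": "web-1", "ip": "10.244.1.2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "ip": "10.244.2.7", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-0", "ip": "10.244.1.9", "labels": {"app": "db"}},
]

if __name__ == "__main__":
    print(backend_ips({"app": "web"}, PODS))
```

If `web-1` is recreated with a new IP but the same labels, the selector still matches it, which is why clients can keep using the Service name while pod IPs churn.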

Once we have these pieces in the mix, we’ve got pretty much everything we need to get going with a Kubernetes cluster. There are still a few elements missing when it comes to security, and that is where we would use things like a service mesh or an API gateway. Both are huge topics in themselves, so let’s take a high-level look at them.

Service Mesh

Before delving into service meshes, it's essential to understand how applications communicate. Typically, applications talk to each other over HTTP rather than working with raw IP addresses directly. HTTP is simply a protocol used for communication. Service A communicates with Service B, often using HTTP requests like GET to retrieve data. Other protocols can be involved, but HTTP is predominant.

HTTP operates at layer 7, the application layer, of the OSI model, which spans seven layers from the physical layer up to the application layer. The network layer (layer 3) primarily focuses on routing packets between hosts; it has no visibility into the requests themselves, so it lacks the intelligence needed for more sophisticated operations.

Layer 7 offers substantial benefits, such as the ability to pass authentication tokens, filter requests, and control access through rate limiting. Filtering at the IP or hostname level, or at lower layers, is limited in this regard. Layer 7 also makes it possible to enforce identity and authorization, enhancing security.

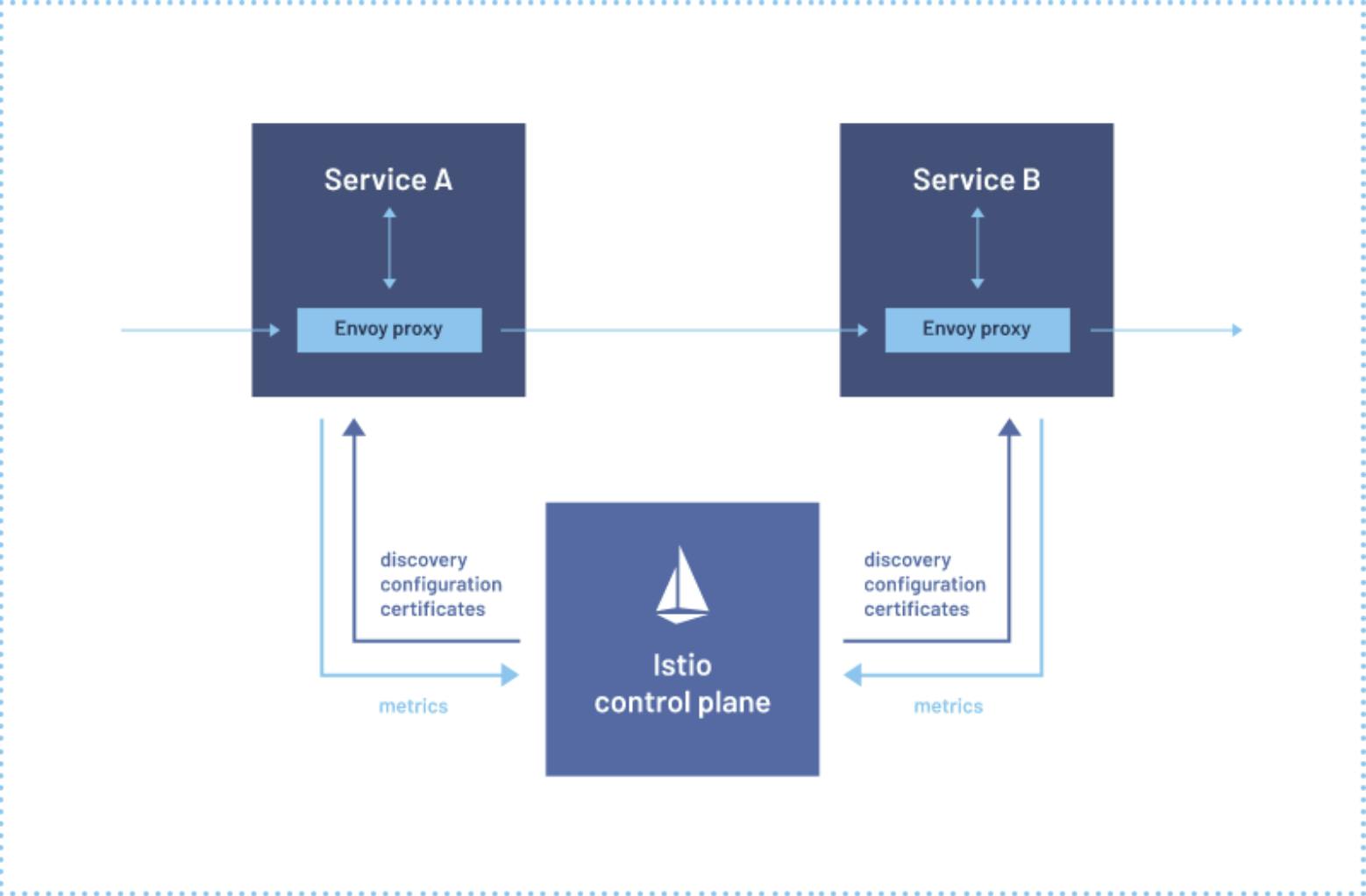

A service mesh builds on these concepts. It deploys its own control plane, which interacts with the Kubernetes control plane and configures the mesh. The data plane consists of proxies running inside Kubernetes, typically as sidecars next to each workload, which handle routing, filtering, and authorization. An ingress gateway (for incoming traffic) and an egress gateway (for outgoing traffic) control traffic in and out of the cluster, while the smaller proxies inside the cluster manage traffic for individual services.
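One routing decision a data-plane proxy can make is a weighted split between two versions of a service, the mechanism behind canary launches. In a mesh such as Istio this is declared as route weights in configuration rather than written by hand; the Python sketch below only illustrates the per-request choice, with hypothetical version names and a 90/10 split.

```python
import random
from collections import Counter

def pick_backend(weights: dict, rng: random.Random) -> str:
    """Choose a backend version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]

if __name__ == "__main__":
    rng = random.Random(7)  # fixed seed so the run is repeatable
    split = {"reviews-v1": 90, "reviews-v2": 10}  # hypothetical 90/10 canary
    counts = Counter(pick_backend(split, rng) for _ in range(10_000))
    print(counts)  # roughly 9,000 requests to v1 and 1,000 to v2
```

Shifting traffic gradually then just means changing the weights (say 90/10, then 50/50, then 0/100) while watching the new version's error rate.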

A service mesh not only adds intelligence to routing but also assists with blue-green deployments, canary launches, and resilience. For example, it enables circuit breaking, which stops sending calls to a failing service so that one failure doesn't cascade through the whole application, keeping the rest of it partially functional. Kubernetes itself, meanwhile, takes charge of reconciliation, automatically correcting deviations from the desired state.
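Circuit breaking can be illustrated with a small state machine. Meshes generally implement it inside the proxy and expose it as configuration, so the Python sketch below is only an illustration of the idea, with hypothetical thresholds: after a number of consecutive failures the circuit opens and calls fail fast; after a cool-down, one trial call is let through, and its outcome either closes the circuit or reopens it.

```python
import time

class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""

class CircuitBreaker:
    """Opens after `max_failures` consecutive failures, fails fast while open,
    and lets a single trial call through once `reset_after` seconds have passed."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise CircuitOpenError("circuit open: not calling the failing service")
        # Either closed, or the cool-down has elapsed (half-open): make the call.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures in a row, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None  # any success closes the circuit again
        return result
```

While the circuit is open, callers get an immediate `CircuitOpenError` they can turn into a fallback (for example, a page without the failing widget) instead of waiting on timeouts.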

In managed clusters, Kubernetes works with the cloud provider's APIs to handle node failures, reprovisioning nodes when needed. This automates networking from the physical layer all the way up to the service mesh, offering a seamless experience. The same automation extends to cloud providers like AWS, where clusters, load balancers, and EC2 instances are provisioned behind the scenes.
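The reconciliation idea (compare the desired state with the actual state and act on the difference) can be sketched as a single control-loop pass. `NodePool`, `provision`, and `deprovision` are hypothetical stand-ins for a cloud provider's VM APIs; real controllers run this loop continuously and watch for changes rather than being called by hand.

```python
from dataclasses import dataclass, field

@dataclass
class NodePool:
    """Hypothetical node pool; provision/deprovision stand in for cloud VM API calls."""
    desired: int
    nodes: set = field(default_factory=set)
    created: int = 0

    def provision(self) -> str:
        self.created += 1
        name = f"node-{self.created}"
        self.nodes.add(name)
        return name

    def deprovision(self, name: str) -> None:
        self.nodes.discard(name)

def reconcile(pool: NodePool) -> list:
    """One control-loop pass: observe actual state, compare with desired, act on the gap."""
    actions = []
    while len(pool.nodes) < pool.desired:
        actions.append(("created", pool.provision()))
    while len(pool.nodes) > pool.desired:
        victim = max(pool.nodes)  # pick deterministically for the sketch
        pool.deprovision(victim)
        actions.append(("deleted", victim))
    return actions

if __name__ == "__main__":
    pool = NodePool(desired=3)
    print(reconcile(pool))        # creates three nodes
    pool.nodes.discard("node-2")  # simulate a node failing
    print(reconcile(pool))        # replaces it
```

Running the pass again when nothing has changed does nothing, which is what makes it safe to run the loop over and over.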

API Gateway

An API gateway typically sits on top of a load balancer: the load balancer listens for external requests, while the API gateway handles the request processing logic. The concept predates Kubernetes and is versatile, applicable to various environments, including VMs, functions, containers, or external services.
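Part of the request processing logic an API gateway handles is deciding where a request goes and whether it may go there at all. The Python sketch below routes by the longest matching path prefix and rejects `/api/` calls that carry no `Authorization` header before they reach any backend. The route table, upstream URLs, and the auth rule are hypothetical; real gateways express this as configuration.

```python
# Hypothetical route table: path prefix -> upstream service URL.
ROUTES = {
    "/api/orders": "http://orders.internal:8080",
    "/api/users": "http://users.internal:8080",
    "/": "http://frontend.internal:80",
}

def route(path: str, routes=ROUTES):
    """Return the upstream whose prefix matches the most of the path, or None."""
    best_prefix, best_upstream = None, None
    for prefix, upstream in routes.items():
        # Match whole segments: "/api/orders" matches "/api/orders/42", not "/api/ordersX".
        matches = path == prefix or path.startswith(prefix.rstrip("/") + "/")
        if matches and (best_prefix is None or len(prefix) > len(best_prefix)):
            best_prefix, best_upstream = prefix, upstream
    return best_upstream

def handle(path: str, headers: dict):
    """Decide what the gateway does with a request before any backend sees it."""
    upstream = route(path)
    if upstream is None:
        return ("reject", 404)
    has_auth = any(key.lower() == "authorization" for key in headers)
    if path.startswith("/api/") and not has_auth:
        return ("reject", 401)  # hypothetical policy: API calls must carry credentials
    return ("forward", upstream)
```

For example, `handle("/api/users/7", {"Authorization": "Bearer abc"})` forwards to the users service, while the same path without the header is rejected with 401 at the edge.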

The API gateway serves as the primary entry point for accessing APIs. It is similar to a service mesh, but centralized at the edge of the cluster or environment. In essence, a service mesh is an evolution of the API gateway that runs across all workloads and offers similar capabilities.

As your needs evolve, transitioning from an API gateway to a service mesh may be necessary, especially when dealing with intricate service-to-service communication and dependencies.

In today's Kubernetes-centric landscape, service meshes like Istio accommodate both VM and Kubernetes workloads, serving as the API front end for both.

However, in purely virtual machine environments, sticking with API gateways might be more fitting due to service coupling and functionality considerations.

API gateways provide additional features like rate limiting, which ensures that services don't get overwhelmed by limiting the number of incoming requests over time. This prevents service outages caused by a sudden influx of requests.
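Rate limiting is often implemented with a token bucket: each request spends a token, tokens refill at a fixed rate, and the bucket's capacity bounds how large a burst can be. Which algorithm a particular gateway uses is product-specific, so treat this Python sketch as one common approach, with hypothetical parameters.

```python
import time

class TokenBucket:
    """Allows bursts of up to `capacity` requests and a sustained `rate` requests per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the time elapsed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests here
```

With `rate=2` and `capacity=3`, three back-to-back requests pass and the fourth is rejected; half a second later one more token has accumulated, so exactly one more request passes.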

Additionally, API gateways act as front doors and can function as web application firewalls, filtering inbound traffic based on policies. They can also handle TLS termination and authentication, simplifying authentication by integrating with other identity systems.

Conclusion

In summary, networking is the backbone of modern technology, enabling communication across devices and applications. Within Kubernetes, a dynamic container orchestration platform, networking takes centre stage, allowing containers to interact seamlessly. This article explores the critical role of networking in Kubernetes and the solutions it employs.

Kubernetes faces the challenge of managing myriad microapplications, each with unique network requirements. To address this, it harnesses virtual networks and the Container Network Interface (CNI) for automated network management. These virtual networks adapt to the ever-changing container landscape, ensuring uninterrupted communication.

DNS services are crucial for translating human-readable hostnames into IP addresses and for facilitating pod communication within clusters. Kubernetes Services, including ClusterIP, NodePort, and LoadBalancer, further enhance connectivity for internal and external traffic.

To address advanced networking and security needs, the Kubernetes ecosystem offers service meshes and API gateways. Service meshes like Istio enable intelligent routing, filtering, and authorization, and support features like blue-green deployments. API gateways serve as centralized entry points, handling tasks like rate limiting, web application firewall functionality, TLS termination, and authentication.

As Kubernetes continues to shape the cloud-native landscape, mastering its networking intricacies is pivotal for organizations seeking reliability, security, and scalability in their applications and services.

Thanks for reading!

If you liked this blog, feel free to share it with your fellow companions and stay updated with the latest developments in Cloud Native networking by following us on Twitter at EmpathyOps and checking out our website!